04. TD Control: Sarsa

TD Control: Sarsa

Monte Carlo (MC) control methods require us to complete an entire episode of interaction before updating the Q-table. Temporal Difference (TD) methods will instead update the Q-table after every time step.

## Video

TD Control Sarsa Part 1

Watch the next video to learn about Sarsa (or Sarsa(0) ), one method for TD control.

## Video

TD Control Sarsa Part 2

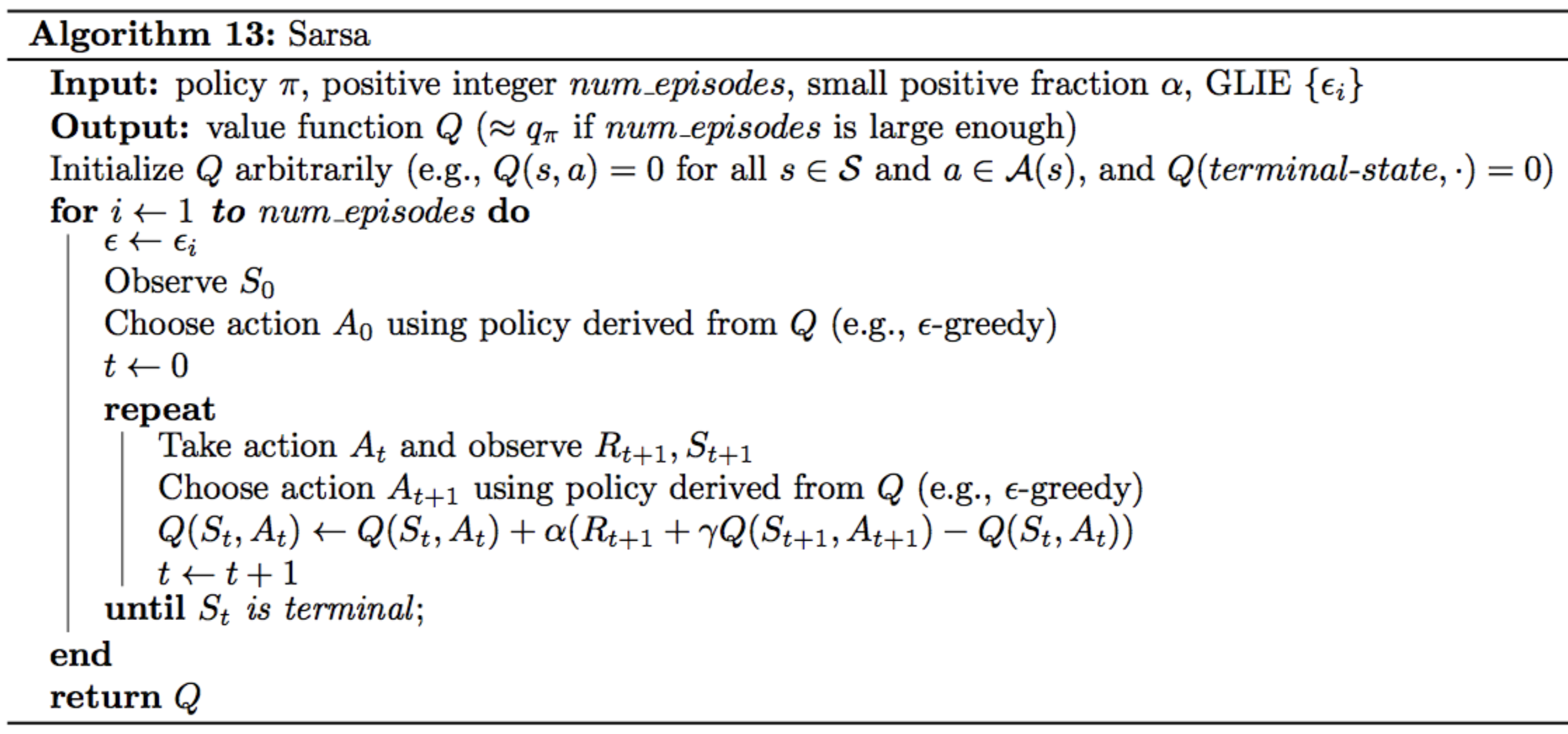

## Pseudocode

In the algorithm, the number of episodes the agent collects is equal to num_episodes . For every time step t\geq 0 , the agent:

- takes the action A_t (from the current state S_t ) that is \epsilon -greedy with respect to the Q-table,

- receives the reward R_{t+1} and next state S_{t+1} ,

- chooses the next action A_{t+1} (from the next state S_{t+1} ) that is \epsilon -greedy with respect to the Q-table,

- uses the information in the tuple ( S_t , A_t , R_{t+1} , S_{t+1} , A_{t+1} ) to update the entry Q(S_t, A_t) in the Q-table corresponding to the current state S_t and the action A_t .